Disable the firewall: sudo ufw disable

Prerequisites:

- docker: download docker-ce from the Tsinghua mirror

- containerd: https://containerd.io/downloads/

sudo apt install containerd

Configure a proxy for containerd

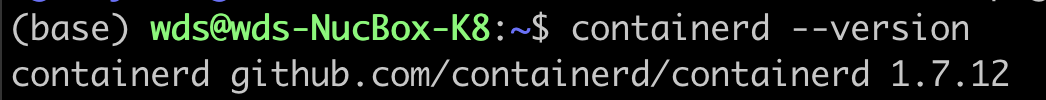

This is the version I have installed.

While starting up, containerd may run into a BoltDB problem:

ls -l /var/lib/containerd/io.containerd.metadata.v1.bolt/meta.db

# Reinitialize BoltDB

sudo rm -rf /var/lib/containerd/io.containerd.metadata.v1.bolt/meta.db

sudo systemctl restart containerd
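Removing meta.db throws away containerd's metadata store, so it may be worth keeping a copy in case the reset does not help. A minimal sketch, assuming a hypothetical helper named `backup_meta_db` (it is not part of containerd) that moves the file aside instead of deleting it:

```shell
# Hypothetical helper (not part of containerd): move the BoltDB file aside
# so that it can be restored if reinitializing does not fix the problem.
backup_meta_db() {
  db_path="$1"
  if [ ! -f "$db_path" ]; then
    echo "no such file: $db_path" >&2
    return 1
  fi
  # A timestamped suffix keeps earlier backups from being overwritten.
  mv "$db_path" "$db_path.bak.$(date +%Y%m%d%H%M%S)"
}

# Intended use (as root, with containerd stopped):
#   systemctl stop containerd
#   backup_meta_db /var/lib/containerd/io.containerd.metadata.v1.bolt/meta.db
#   systemctl start containerd
```

containerd then starts with a fresh database, and the old one remains next to it under the .bak name.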

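The example here is a containerd installation managed by systemd.

The containerd configuration usually lives at /etc/containerd/config.toml, and the service file at /lib/systemd/system/containerd.service. The proxy can be configured through environment variables on the service, as described below.

Edit /etc/containerd/config.toml: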

# 1

# Aliyun mirror

sandbox_image = "registry.k8s.io/pause:3.8"

change to

sandbox_image = "registry.aliyuncs.com/k8sxio/pause:3.8"

# 2

SystemdCgroup = false

change to

SystemdCgroup = true

# 3

# Configure registry mirrors

# Nested tables; indent each level by two spaces

[plugins."io.containerd.grpc.v1.cri".registry.mirrors]
  [plugins."io.containerd.grpc.v1.cri".registry.mirrors."docker.io"]
    endpoint = ["https://bqr1dr1n.mirror.aliyuncs.com"]
  [plugins."io.containerd.grpc.v1.cri".registry.mirrors."k8s.gcr.io"]
    endpoint = ["https://registry.aliyuncs.com/k8sxio"]
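Edits 1 and 2 above are plain string substitutions, so they could also be applied without opening an editor. A minimal sketch, assuming GNU sed and a config file that contains exactly the default lines quoted above; the function name `patch_containerd_config` is hypothetical:

```shell
# Hypothetical helper: apply edits 1 and 2 to a containerd config file in place.
patch_containerd_config() {
  cfg_file="$1"
  sed -i \
    -e 's#sandbox_image = "registry.k8s.io/pause:3.8"#sandbox_image = "registry.aliyuncs.com/k8sxio/pause:3.8"#' \
    -e 's/SystemdCgroup = false/SystemdCgroup = true/' \
    "$cfg_file"
}

# Intended use (as root, after keeping a backup of the original file):
#   cp /etc/containerd/config.toml /etc/containerd/config.toml.bak
#   patch_containerd_config /etc/containerd/config.toml
#   systemctl restart containerd
```

If your config.toml pins a different pause tag, the first expression will not match and the file is left unchanged for that line, so check the result with grep afterwards.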

Create or edit the file /lib/systemd/system/containerd.service.d/http-proxy.conf:

[Service]

Environment="HTTP_PROXY=http://127.0.0.1:7890"

Environment="HTTPS_PROXY=http://127.0.0.1:7890"

Environment="NO_PROXY=localhost,127.0.0.0/8,10.0.0.0/8,172.16.0.0/12,192.168.0.0/16,.svc,.cluster.local,.ewhisper.cn,<nodeCIDR>,<APIServerInternalURL>,<serviceNetworkCIDRs>,<etcdDiscoveryDomain>,<clusterNetworkCIDRs>,<platformSpecific>,<REST_OF_CUSTOM_EXCEPTIONS>"

Here is a recommended NO_PROXY configuration:

- Local addresses and ranges: localhost and 127.0.0.1 or 127.0.0.0/8

- Kubernetes default domain suffixes: .svc and .cluster.local

- The Kubernetes node CIDR, or even every node range that should be reached without the proxy: <nodeCIDR>

- The internal URL of the APIServer: <APIServerInternalURL>

- Service Network: <serviceNetworkCIDRs>

- (If present) the etcd Discovery Domain: <etcdDiscoveryDomain>

- Cluster Network: <clusterNetworkCIDRs>

- Other platform-specific ranges (such as DevOps, Git or artifact repositories...): <platformSpecific>

- Any other custom NO_PROXY ranges: <REST_OF_CUSTOM_EXCEPTIONS>

- Common private ranges: 10.0.0.0/8, 172.16.0.0/12, 192.168.0.0/16
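The NO_PROXY value is a comma-separated join of the entries listed above, and the drop-in file is three Environment= lines under [Service]. A minimal sketch that builds both; the function names `join_no_proxy` and `render_proxy_dropin` are hypothetical, and the sample entries leave out the <...> placeholders, which have to be filled in with the real values for your cluster:

```shell
# Hypothetical helper: join all arguments with commas.
join_no_proxy() {
  joined=""
  for entry in "$@"; do
    if [ -z "$joined" ]; then
      joined="$entry"
    else
      joined="$joined,$entry"
    fi
  done
  printf '%s\n' "$joined"
}

# Hypothetical helper: print the systemd drop-in for a proxy URL and NO_PROXY list.
render_proxy_dropin() {
  proxy_url="$1"
  np_list="$2"
  printf '[Service]\n'
  printf 'Environment="HTTP_PROXY=%s"\n' "$proxy_url"
  printf 'Environment="HTTPS_PROXY=%s"\n' "$proxy_url"
  printf 'Environment="NO_PROXY=%s"\n' "$np_list"
}

# Print a drop-in to stdout for review.
sample_no_proxy=$(join_no_proxy localhost 127.0.0.0/8 10.0.0.0/8 \
  172.16.0.0/12 192.168.0.0/16 .svc .cluster.local)
render_proxy_dropin "http://127.0.0.1:7890" "$sample_no_proxy"

# To apply it, pipe the output into the drop-in file with sudo tee, then:
#   sudo systemctl daemon-reload
#   sudo systemctl restart containerd
#   systemctl show --property=Environment containerd
```

The last command shows the environment systemd actually passes to containerd, which is a quick way to confirm the drop-in was picked up.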

Configure the proxy for the shell

vim ~/.bashrc

...

function setproxy() {
  export http_proxy=proxy.i2ec.top:21087
  export https_proxy=proxy.i2ec.top:21087
  export no_proxy=*.i2ec.top,*.local,localhost,127.0.0.1,127.0.0.0/8,172.16.0.0/12,10.0.0.0/8,192.168.0.0/16
}

function unsetproxy() {
  unset http_proxy
  unset https_proxy
  unset no_proxy
}

# Uncomment the next line to enable the proxy in every new shell
# setproxy

export PATH="$PATH:$HOME/tools/bin"

alias src="source ~/.bashrc"

alias k=kubectl

alias kg="kubectl get"

alias kd="kubectl describe"

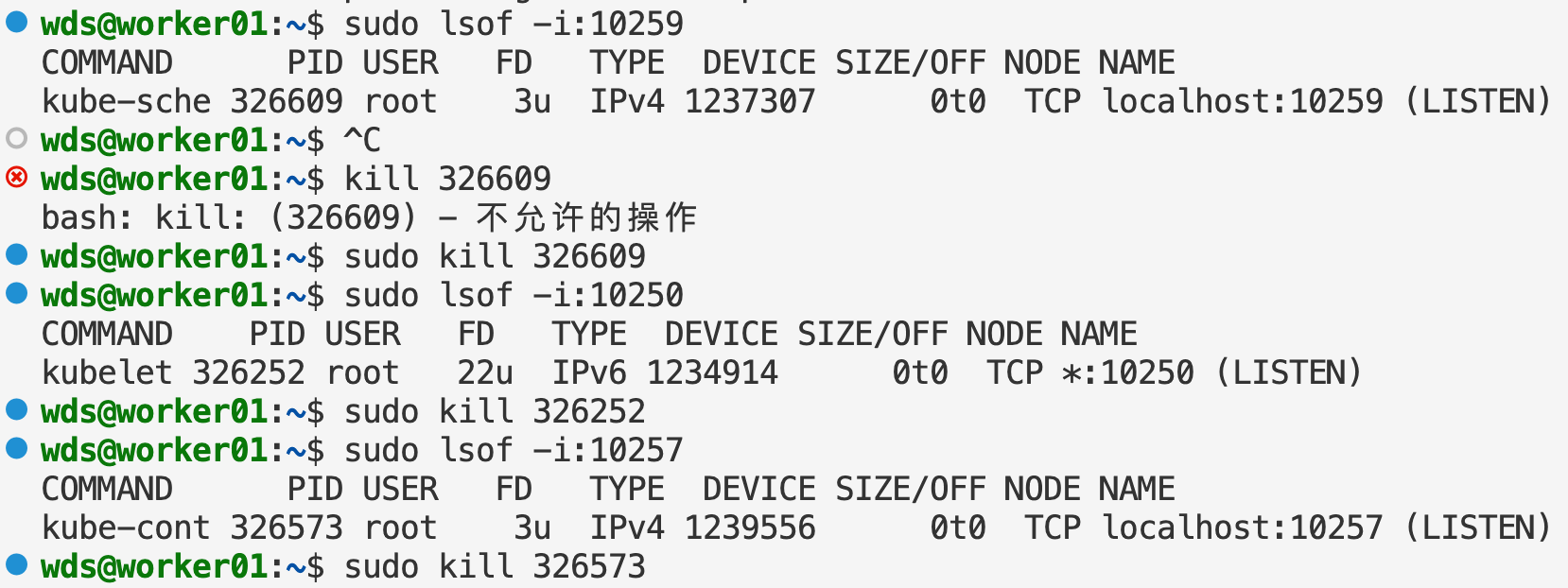

If you installed k8s before and files from that installation are left over, you can use the following steps to remove them first.

sudo apt remove kubelet kubeadm kubectl

sudo rm -rf ~/.kube/config

sudo rm -rf /etc/kubernetes/

sudo rm -r /etc/cni/net.d/

sudo rm -rf /var/lib/etcd/

sudo rm -rf /var/lib/kubelet/
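Before or after running the deletions above, it can help to see which of these directories actually exist. A minimal sketch; `list_k8s_leftovers` is a hypothetical helper, and its optional argument is a path prefix that is only there so the check can be tried against a scratch directory:

```shell
# Hypothetical helper: print the leftover Kubernetes paths that still exist.
# The optional first argument is prepended to every path (empty by default).
list_k8s_leftovers() {
  root_prefix="${1:-}"
  for leftover in /etc/kubernetes /etc/cni/net.d /var/lib/etcd /var/lib/kubelet; do
    if [ -e "$root_prefix$leftover" ]; then
      printf '%s\n' "$root_prefix$leftover"
    fi
  done
}

# Read-only check against the real filesystem; prints nothing when all are gone.
list_k8s_leftovers
```

If kubeadm is still installed, `sudo kubeadm reset` is another way to undo what a previous `kubeadm init` set up on the node.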

You can use either the Tsinghua mirror or the Aliyun mirror; here the Aliyun mirror is used to install k8s.

sudo apt-get update && sudo apt-get install -y apt-transport-https

curl https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | sudo apt-key add -

Then write the Aliyun mirror address into the apt sources list:

sudo vim /etc/apt/sources.list.d/kubernetes.list

# Add the following line

deb https://mirrors.aliyun.com/kubernetes/apt/ kubernetes-xenial main

Save and exit, then update the package index and install k8s:

sudo apt-get update

sudo apt-get install -y kubelet=1.27.0-00 kubeadm=1.27.0-00 kubectl=1.27.0-00

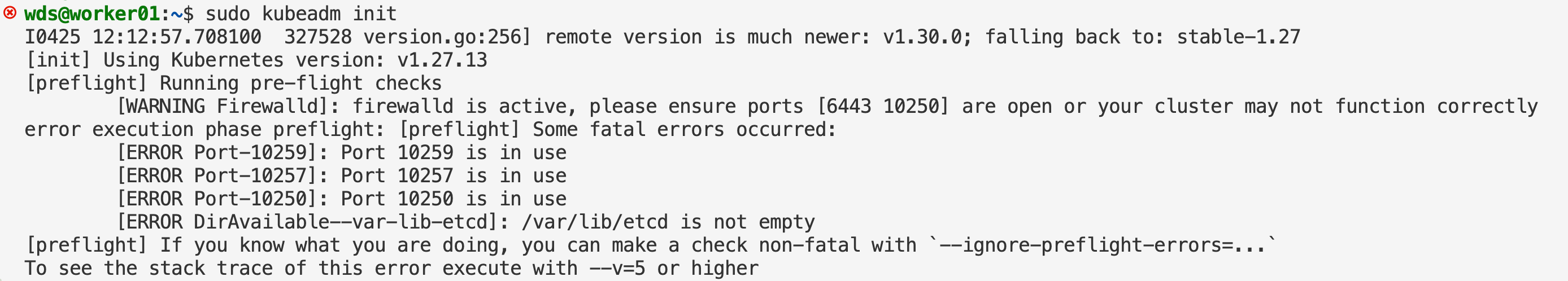

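Initialize k8s

Three problems came up during this initialization.

The first is [WARNING Swap]: swap is enabled; production deployments should disable swap unless testing the NodeSwap feature gate of the kubelet

Swap has to be turned off. Comment out the swap entry in /etc/fstab as well, so that it stays off after a reboot.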

(base) wds@wds-NucBox-K8:~$ sudo swapoff -a

(base) wds@wds-NucBox-K8:~$ sudo vim /etc/fstab

(base) wds@wds-NucBox-K8:~$ sudo mount -a

(base) wds@wds-NucBox-K8:~$ free -h

total used free shared buff/cache available

Mem: 28Gi 4.6Gi 532Mi 241Mi 23Gi 23Gi

Swap: 0B 0B 0B
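The `sudo vim /etc/fstab` step is where the swap entry gets commented out, so that `swapoff -a` does not have to be repeated after every reboot. A sketch of the same edit with GNU sed; `disable_swap_in_fstab` is a hypothetical helper, and it assumes swap entries carry the word swap as a whitespace-delimited field:

```shell
# Hypothetical helper: comment out every active swap entry in an fstab file.
disable_swap_in_fstab() {
  fstab_file="$1"
  # Only lines that are not already comments and have "swap" as a field
  # get a leading '#', so running this twice changes nothing the second time.
  sed -i -E '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$fstab_file"
}

# Intended use (as root, after keeping a backup):
#   cp /etc/fstab /etc/fstab.bak
#   disable_swap_in_fstab /etc/fstab
```

Review the file afterwards; a broken /etc/fstab can keep the machine from booting cleanly.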

The second is [ERROR FileContent--proc-sys-net-bridge-bridge-nf-call-iptables]: /proc/sys/net/bridge/bridge-nf-call-iptables does not exist. This happens because the br_netfilter module configured earlier is not loaded; running the commands below fixes it.

(base) wds@wds-NucBox-K8:~$ sudo modprobe br_netfilter

(base) wds@wds-NucBox-K8:~$ echo 1 | sudo tee /proc/sys/net/bridge/bridge-nf-call-iptables

1

The third is fixed the same way: [ERROR FileContent--proc-sys-net-ipv4-ip_forward]: /proc/sys/net/ipv4/ip_forward contents are not set to 1

(base) wds@wds-NucBox-K8:~$ echo 1 | sudo tee /proc/sys/net/ipv4/ip_forward

1
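Both `modprobe` and writing to /proc only last until the next reboot. A common way to make the settings persistent is a modules-load.d entry plus a sysctl.d file. A minimal sketch that stages both files under a directory of your choice so they can be inspected first; the helper name `stage_k8s_kernel_config` and the file name k8s.conf are assumptions:

```shell
# Hypothetical helper: write the module and sysctl settings below a staging dir.
stage_k8s_kernel_config() {
  stage_dir="$1"
  mkdir -p "$stage_dir/etc/modules-load.d" "$stage_dir/etc/sysctl.d"
  # Load br_netfilter at boot.
  printf 'br_netfilter\n' > "$stage_dir/etc/modules-load.d/k8s.conf"
  # The two values kubeadm's preflight checks complained about.
  printf '%s\n' \
    'net.bridge.bridge-nf-call-iptables = 1' \
    'net.ipv4.ip_forward = 1' > "$stage_dir/etc/sysctl.d/k8s.conf"
}

# Intended use:
#   stage_k8s_kernel_config /tmp/k8s-stage
#   sudo cp -r /tmp/k8s-stage/etc/. /etc/
#   sudo sysctl --system
```

`sysctl --system` reloads every sysctl configuration file, so the values take effect without a reboot.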

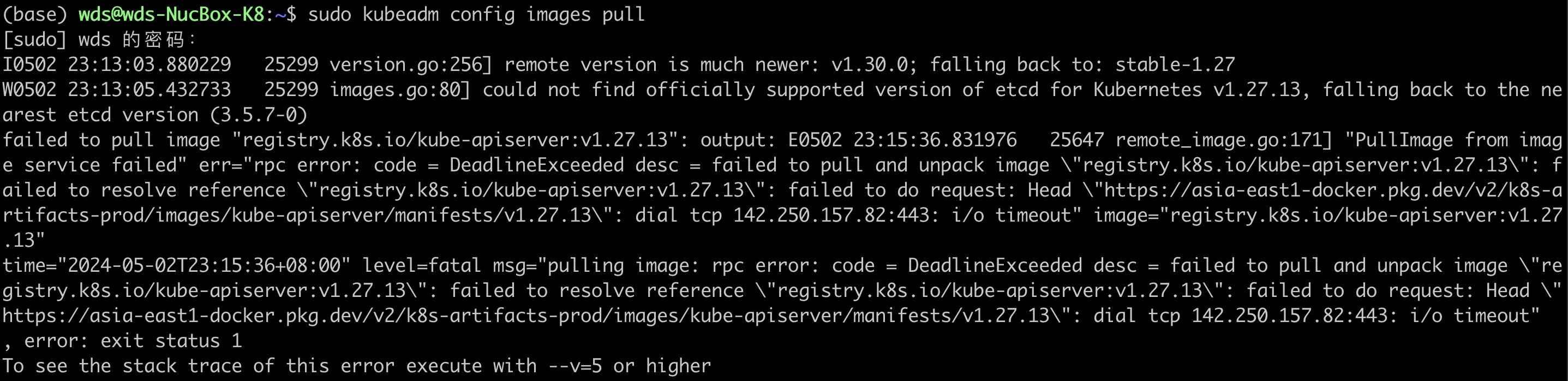

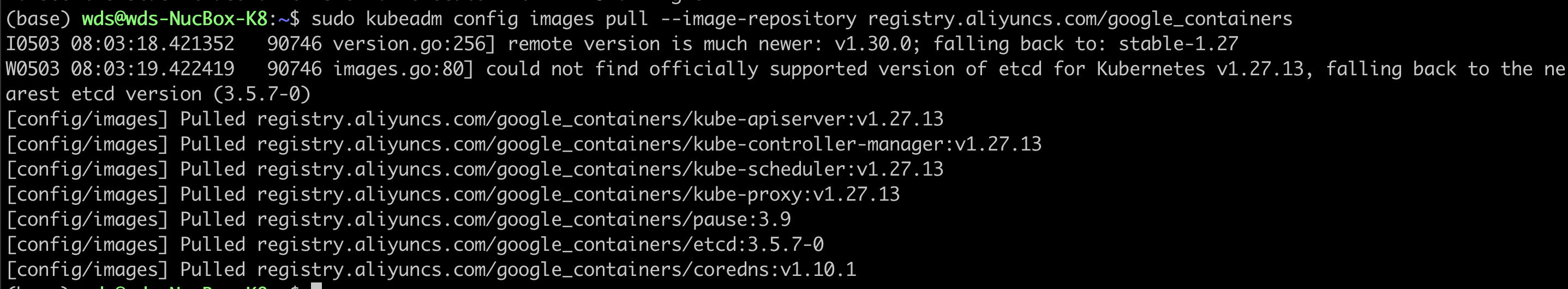

Then you can pull the images in advance:

(base) wds@wds-NucBox-K8:~$ sudo kubeadm config images list

I0503 08:02:18.239000 90461 version.go:256] remote version is much newer: v1.30.0; falling back to: stable-1.27

W0503 08:02:19.105381 90461 images.go:80] could not find officially supported version of etcd for Kubernetes v1.27.13, falling back to the nearest etcd version (3.5.7-0)

registry.k8s.io/kube-apiserver:v1.27.13

registry.k8s.io/kube-controller-manager:v1.27.13

registry.k8s.io/kube-scheduler:v1.27.13

registry.k8s.io/kube-proxy:v1.27.13

registry.k8s.io/pause:3.9

registry.k8s.io/etcd:3.5.7-0

registry.k8s.io/coredns/coredns:v1.10.1

(base) wds@wds-NucBox-K8:~$ sudo kubeadm config images pull --image-repository registry.aliyuncs.com/google_containers

If you run into the problem shown below, use the command above with the --image-repository option to specify the registry to pull from.

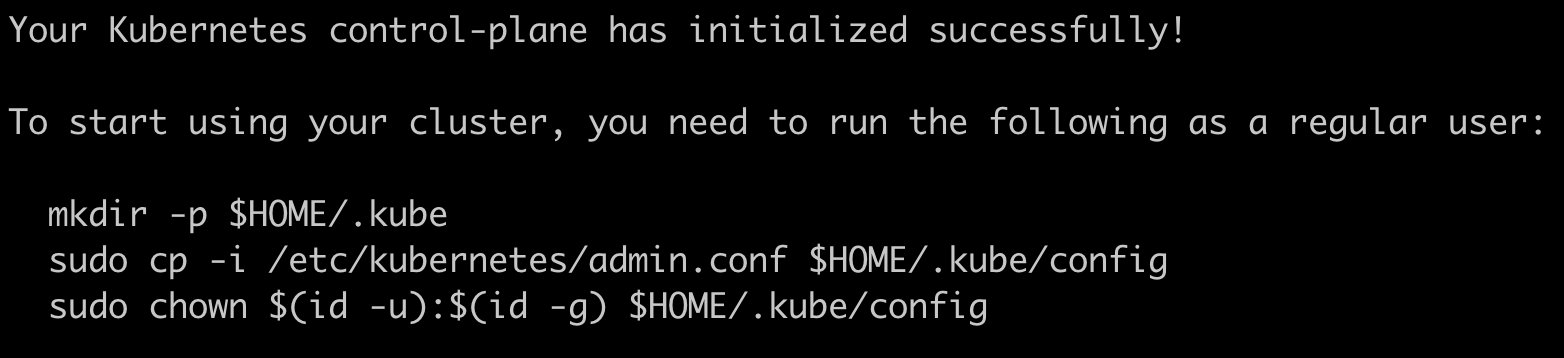

Then initialize the cluster: kubeadm init

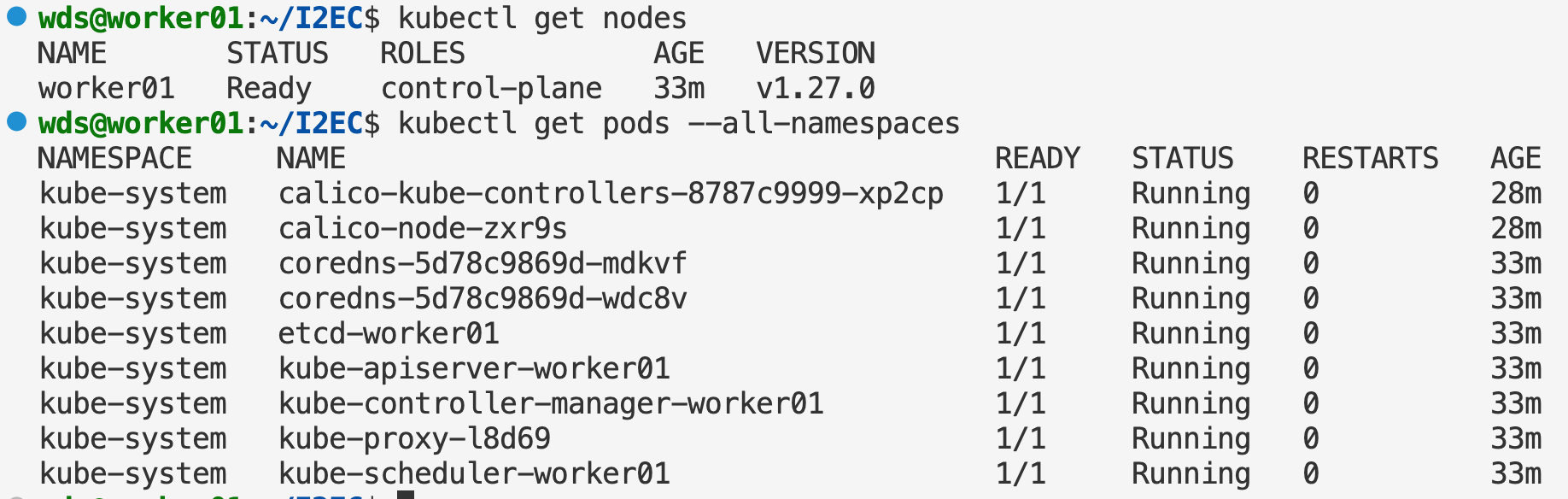
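If the images were pulled from a mirror, `kubeadm init` needs the same --image-repository value, otherwise it looks for the registry.k8s.io images again. A sketch that only assembles and prints the command so it can be reviewed before running; `kubeadm_init_command` is a hypothetical helper, and v1.27.13 is the version reported by the image list earlier:

```shell
# Hypothetical helper: print a kubeadm init command line for a given
# image repository and Kubernetes version. It does not run kubeadm.
kubeadm_init_command() {
  image_repo="$1"
  k8s_version="$2"
  printf 'sudo kubeadm init --image-repository %s --kubernetes-version %s\n' \
    "$image_repo" "$k8s_version"
}

kubeadm_init_command registry.aliyuncs.com/google_containers v1.27.13
```

When the init succeeds, kubeadm prints the follow-up steps for copying /etc/kubernetes/admin.conf into $HOME/.kube/config so that kubectl can reach the cluster.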

Install the Calico network plugin

You may also need Tigera.

The calico.yaml is given below; copy it directly if you need it.

kubectl apply -f calico.yaml

kubectl apply -f https://docs.projectcalico.org/manifests/calico.yaml

Modify the containerd network configuration

sudo vim /etc/cni/net.d/10-containerd-net.conflist

Copy the relevant content from "An example containerd configuration file":

{
  "cniVersion": "1.0.0",
  "name": "containerd-net",
  "plugins": [
    {
      "type": "bridge",
      "bridge": "cni0",
      "isGateway": true,
      "ipMasq": true,
      "promiscMode": true,
      "ipam": {
        "type": "host-local",
        "ranges": [
          [{
            "subnet": "10.88.0.0/16"
          }],
          [{
            "subnet": "2001:db8:4860::/64"
          }]
        ],
        "routes": [
          { "dst": "0.0.0.0/0" },
          { "dst": "::/0" }
        ]
      }
    },
    {
      "type": "portmap",
      "capabilities": {"portMappings": true},
      "externalSetMarkChain": "KUBE-MARK-MASQ"
    }
  ]
}

sudo systemctl restart containerd.service

Wait for the installation to finish.

kubectl get nodes worker01 -o yaml | code -

Search for taint.

Then remove the taint: kubectl taint node worker01 node-role.kubernetes.io/control-plane-

Check the CPU architecture

ARCH=$(uname -s | tr 'A-Z' 'a-z')-$(uname -m | sed 's/x86_64/amd64/') || ARCH=windows-amd64.exe

echo ${ARCH}
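The one-liner above maps x86_64 to amd64 but passes every other machine name through unchanged. A sketch of a slightly more general mapping; the function `normalize_platform` is hypothetical, and the aarch64 to arm64 rule is an added assumption about the naming used by whatever download you are targeting:

```shell
# Hypothetical helper: turn `uname -s` and `uname -m` values into an
# "os-arch" string such as linux-amd64.
normalize_platform() {
  os_name=$(printf '%s' "$1" | tr 'A-Z' 'a-z')
  case "$2" in
    x86_64)  cpu_arch=amd64 ;;
    aarch64) cpu_arch=arm64 ;;   # assumption, not in the original one-liner
    *)       cpu_arch="$2" ;;
  esac
  printf '%s-%s\n' "$os_name" "$cpu_arch"
}

ARCH=$(normalize_platform "$(uname -s)" "$(uname -m)")
echo "${ARCH}"
```

Taking the two uname values as arguments keeps the mapping itself easy to check on a machine with a different architecture.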

Resources